This blog, titled Tactile Rendering of Textures using DAQ, was originally provided by our friends at Dewesoft. Their article, “Haptic Rendering – A Virtual Reality Challenge”, details a pioneering project in the field of virtual reality.

The project focuses on simulating tactile sensations, or “haptic feedback”. This ambitious project was undertaken by students from the Sapienza University of Rome and the National Institute of Applied Sciences (INSA) in Lyon, with support from Dewesoft, a company specialising in data acquisition and analysis tools.

What was the core objective?

The project’s core objective was to understand and simulate the sense of touch. Touch is a complex, multifaceted sense involving both the perception and the manipulation of objects. Tactile rendering, the recreation of touch sensations, is therefore a significant technological challenge, and it lags behind visual and auditory rendering technologies, which have seen substantial advancements in recent years.

How the textures were captured

The research studied the mechanical signals (forces, friction, and vibrations) that underlie tactile perception, specifically when a finger explores surface textures. This study was part of a broader, multidisciplinary effort involving engineering, neuroscience, and psychology teams, aimed at simulating the most complex of the five senses.

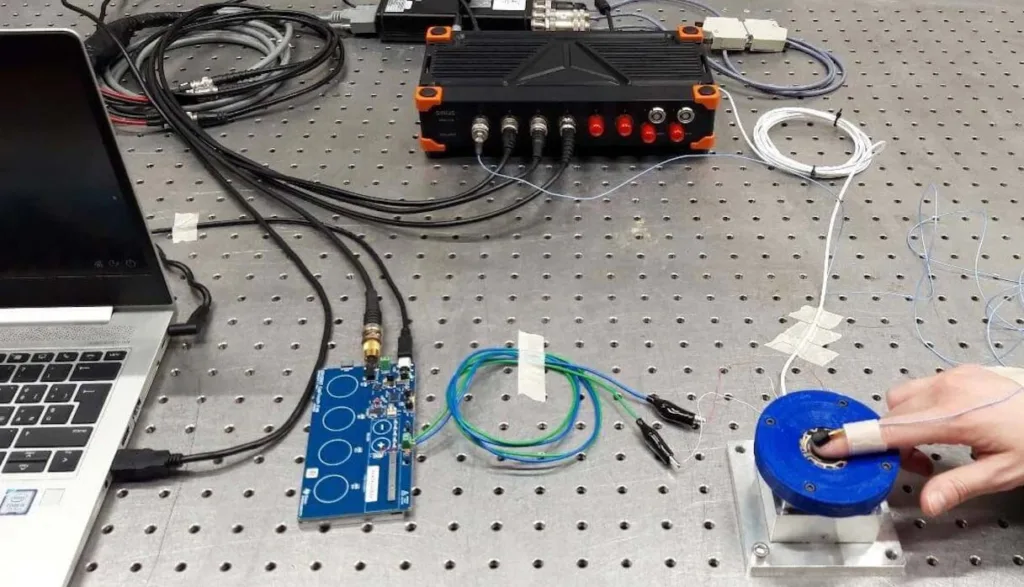

The experimental setup used in this project involved measuring tactile stimuli generated when a finger explores a surface. This setup included a triaxial force transducer and an accelerometer to measure contact forces and friction-induced vibrations (FIV), which are crucial for texture perception. Advanced spectral analysis techniques were used to link these mechanical signals with texture perception.
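
A triaxial transducer reports one normal and two tangential force components, so a friction coefficient can be estimated sample by sample as the ratio of the resultant tangential force to the normal force. The sketch below is our own illustration, not the team’s code; the function name and the `min_normal` threshold are hypothetical.

```python
import math

def friction_coefficient(fx, fy, fz, min_normal=0.05):
    """Estimate the friction coefficient for each triaxial force sample.

    fx, fy: tangential force components (N); fz: normal force (N).
    Samples whose normal force is below min_normal (a hypothetical
    threshold, in N) are skipped, as the ratio is unreliable without
    firm finger contact.
    """
    mu = []
    for x, y, z in zip(fx, fy, fz):
        if abs(z) < min_normal:
            continue
        # Resultant tangential force divided by normal force
        mu.append(math.hypot(x, y) / abs(z))
    return mu

# Hypothetical samples: the first has a 1.0 N normal load with 0.3 N and
# 0.4 N tangential components; the second has almost no normal load.
print(friction_coefficient([0.3, 0.2], [0.4, 0.0], [1.0, 0.01]))
```

Skipping near-zero normal loads avoids huge, meaningless ratios at the moments the finger lifts off or first touches the surface.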

The team then developed a tactile rendering device using a piezoelectric actuator, driven by a Texas Instruments electronic board, to recreate these textures at a distance. This involved processing the vibrational signal measured from real surfaces and using it to stimulate the user’s fingertip via the actuator. The device’s accuracy was verified by comparing the vibrations induced by actual surfaces with those generated by the device.
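
The article does not say exactly how the measured signal was processed before it reached the actuator. As a minimal, hypothetical illustration, one common first step is to remove the DC offset from the recorded acceleration and scale it to the drive range the actuator electronics accept; `max_drive` below is an assumed full-scale value, not a figure from the project.

```python
def to_drive_signal(accel, max_drive=1.0):
    """Map a measured acceleration record to an actuator drive waveform.

    Removes the DC offset (mean) and scales so the largest excursion
    equals max_drive. max_drive is a hypothetical full-scale value; a real
    setup would use whatever range its amplifier and actuator accept.
    """
    if not accel:
        return []
    mean = sum(accel) / len(accel)
    centred = [a - mean for a in accel]
    peak = max(abs(c) for c in centred)
    if peak == 0:
        # A flat record carries no vibration to reproduce
        return [0.0] * len(accel)
    return [max_drive * c / peak for c in centred]

# Hypothetical record with an offset of 2.0, scaled to a +/-2.0 drive range
print(to_drive_signal([1.0, 3.0, 2.0], max_drive=2.0))
```

Simple scaling like this ignores the actuator’s own frequency response, which a practical rendering chain would likely need to compensate for; that is one reason the reproduced vibrations have to be measured and checked against the originals.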

Exciting Project Expansion

Furthermore, the project expanded to include a neuroscientific dimension, partnering with the Laboratory of Cognitive Neurosciences at the University of Marseille. This new phase aimed to map the entire chain of tactile perception, from surface texture through mechanical stimuli to brain response. It involved recording both the mechanical stimuli and the resulting electroencephalographic (EEG) signals during surface exploration.

Dewesoft’s advanced data acquisition and analysis system was essential in several key aspects of the project:

Measurement of Tactile Stimuli: The Dewesoft system was integral in measuring the tactile stimuli generated when a finger explores different surface textures. This measurement was crucial for understanding the mechanical aspects of tactile perception.

Acquisition of Mechanical Signals: The project involved capturing complex mechanical signals such as contact forces, friction, and friction-induced vibrations (FIV). Dewesoft’s system, specifically an eight-channel SIRIUSi DAQ with both inputs and outputs, was used to acquire these signals. Its high precision and low background noise were critical for accurately capturing these subtle stimuli.

Spectral Analysis: The system performed spectral analyses, such as the Fast Fourier Transform (FFT), Power Spectral Density (PSD), and spectrograms, on the acceleration signals. These analyses were essential for investigating the relationship between the spectral characteristics of the induced vibrations, the perception of textures, and the topographies of the surfaces.
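
To show why spectra link vibrations to surface topography, here is a hedged sketch using a commonly cited relationship for periodic textures: a finger sliding at speed v over a texture with spatial period λ excites vibrations at about f = v / λ. The sliding speed, grating period, and sample rate below are hypothetical, and the direct DFT is written out for clarity; real analyses would use an FFT and, for a PSD estimate, averaging such as Welch’s method.

```python
import cmath
import math

def one_sided_power_spectrum(x, fs):
    """Return (frequencies in Hz, power) from a direct DFT of a real signal.

    O(N^2) for readability; the power values are |X[k]|^2 / N and are not
    normalised as a calibrated PSD.
    """
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(s) ** 2 / n)
    return freqs, power

# Hypothetical exploration: finger sliding at 50 mm/s over a grating with a
# 0.5 mm spatial period, so the expected vibration frequency is v / period.
v_mm_s, period_mm = 50.0, 0.5
f_expected = v_mm_s / period_mm
fs, n = 1024.0, 512
accel = [math.sin(2 * math.pi * f_expected * t / fs) for t in range(n)]

freqs, power = one_sided_power_spectrum(accel, fs)
peak_freq = freqs[max(range(len(power)), key=power.__getitem__)]
print(f_expected, peak_freq)
```

With a pure synthetic tone the spectral peak sits exactly at v / λ; measured finger signals are noisier and broader, which is where averaged PSDs and spectrograms become useful.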

Development of the Tactile Rendering Device: In the creation of the tactile rendering device, the Dewesoft system was again instrumental. The device, which included an electro-active polymer piezoelectric actuator, was driven by a signal processed and generated by Dewesoft. This setup enabled the simulation of tactile stimuli previously measured during surface exploration.

Verification and Calibration: The Dewesoft system was used to verify the accuracy of the tactile rendering device by comparing the acceleration signal measured on real surfaces with the signal reproduced by the device, ensuring that the vibrational tactile stimuli were reproduced correctly.
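
The article does not name the comparison metrics used. As one hypothetical way to quantify how closely a reproduction matches the original, the sketch below computes the Pearson correlation (shape similarity) and the RMS error relative to the reference (amplitude accuracy) between two time-aligned records.

```python
import math

def compare_signals(reference, reproduced):
    """Return (Pearson correlation, relative RMS error) for two records.

    Both records are assumed to be time-aligned and sampled at the same
    rate; the metric choice is illustrative only.
    """
    n = len(reference)
    if n < 2 or len(reproduced) != n:
        raise ValueError("signals must be aligned and of equal length")
    mean_ref = sum(reference) / n
    mean_rep = sum(reproduced) / n
    cov = sum((a - mean_ref) * (b - mean_rep) for a, b in zip(reference, reproduced))
    var_ref = sum((a - mean_ref) ** 2 for a in reference)
    var_rep = sum((b - mean_rep) ** 2 for b in reproduced)
    if var_ref == 0 or var_rep == 0:
        raise ValueError("constant signals have no defined correlation")
    r = cov / math.sqrt(var_ref * var_rep)
    # RMS of the difference, relative to the RMS of the reference signal
    rms_err = math.sqrt(sum((a - b) ** 2 for a, b in zip(reference, reproduced)) / n)
    rms_ref = math.sqrt(sum(a * a for a in reference) / n)
    return r, rms_err / rms_ref

# Hypothetical records: the reproduction has the right shape at 90 % amplitude
print(compare_signals([0.0, 1.0, 0.0, -1.0], [0.0, 0.9, 0.0, -0.9]))
```

A correlation near 1 with a non-zero relative error, as here, points to a gain mismatch rather than a distorted waveform. When exact time alignment is hard to guarantee, comparing the two spectra instead of the raw waveforms is often more robust.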

Integration with EEG Measurements: In the later stages of the project, when the team collaborated with neuroscientists, Dewesoft’s system was used not only for measuring mechanical stimuli but also for integrating these measurements with electroencephalographic (EEG) signals. This integration was crucial for understanding the complete chain of tactile perception from physical touch to brain response.
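
How the two data streams were combined is not described in the article. A typical first step in any such integration is putting both on a common timebase; the hypothetical sketch below linearly interpolates one channel at another channel’s timestamps, assuming both were recorded against the same clock.

```python
import bisect

def resample_linear(t_src, x_src, t_dst):
    """Linearly interpolate x_src, sampled at strictly increasing times
    t_src (s), at the times in t_dst.

    Assumes both channels share one clock, e.g. because they were recorded
    by the same acquisition system; times outside t_src are clamped to the
    first or last sample.
    """
    out = []
    for t in t_dst:
        i = bisect.bisect_right(t_src, t)
        if i == 0:
            out.append(x_src[0])
        elif i == len(t_src):
            out.append(x_src[-1])
        else:
            t0, t1 = t_src[i - 1], t_src[i]
            x0, x1 = x_src[i - 1], x_src[i]
            out.append(x0 + (x1 - x0) * (t - t0) / (t1 - t0))
    return out

# Hypothetical example: a mechanical channel sampled at 0, 1 and 2 s,
# evaluated at EEG sample times
print(resample_linear([0.0, 1.0, 2.0], [0.0, 10.0, 20.0], [0.5, 1.5, 2.5]))
```

Interpolating a high-rate vibration signal down to a slower EEG rate without first low-pass filtering it can cause aliasing, so in practice a filter would normally be applied before downsampling.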

Summary

In summary, this blog details a groundbreaking project in virtual reality, aiming to simulate the sense of touch. This involves understanding the mechanics of tactile perception, developing a device to recreate these sensations, and exploring the neurological aspects of touch.

The project’s success could have wide-ranging applications in various fields, from entertainment to medical technology.

The Dewesoft system was a pivotal tool in this project, facilitating the accurate measurement and analysis of the mechanical stimuli associated with tactile perception. It therefore played a key role in the development and validation of the tactile rendering device.

You really must read this original blog to get a full understanding of the possibilities. Read it now or contact us to learn more about Dewesoft’s fantastic range of products.