EON Instrumentation has identified a need to transport two independent HD video streams that together represent a 3D image. Below is a technical explanation of how images become 3D, and of how streaming video through various transport media can cause the frame timing of each stream to differ significantly, which affects our perception.

How our brain views a 3D image

The approach described here is different from interleaving odd and even frames (left-right-left-right). It uses two independent views of the same object, captured in real time by two independent cameras with stereo lens spacing. This is how we all see: our eyes provide two independent views with stereo spacing, and our brains turn them into a perception of 3D space.

What about HD Video over the Cloud?

However, when HD video streams are inserted into an Ethernet or radio transport system, video compression is needed to reduce the bit rate so that it fits within the transport channel. In either case, transport may be point-to-point or networked. When networks are involved, the pathway from source to destination is typically variable, and the variability worsens when packetised video is sent into the cloud. When radio is used, the bit error rate (BER), atmospheric effects and any relay channels (nodes) along the way can also vary the effective path length and therefore the delay.

Variable Latency in compressed video

Compression, pathway length and network node hops each introduce variable latency into each stream, and that latency differs from one stream to the other. As a result, the latency of compressed video transported in these ways can vary from 100 milliseconds to several seconds, which can distort how we view the image. Compression and decompression alone, for example, introduce a variable latency of 60 to 500 milliseconds, influenced by how much the scene changes from frame to frame.

CODEC latency depends on the group of pictures (GOP) settings of the MPEG compressor, the processing power of the source and destination computing systems, system buffering requirements and BER. If the source video runs at 60 frames per second (FPS), CODEC latency may amount to 3 to 10 frames. Transport then adds its own latency on top of the CODEC latency: typically tens to hundreds of milliseconds, but as much as several seconds, depending on other traffic, BER (which causes retry/resend delays), pathway length, node hops and more. Therefore, the transport latency variation at 60 FPS may be 1 to 10 frames or more.
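To make the conversion between milliseconds and frames concrete, the Python sketch below divides a latency by the frame period (1000/FPS milliseconds). The function names are hypothetical, and the 10, 60 and 100 ms inputs are illustrative values taken from the ranges quoted above.

```python
def frame_period_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def latency_in_frames(latency_ms: float, fps: float = 60.0) -> float:
    """Express a latency in milliseconds as a fractional number of frames."""
    return latency_ms / frame_period_ms(fps)


if __name__ == "__main__":
    print(f"frame period at 60 FPS: {frame_period_ms(60):.2f} ms")
    for ms in (10, 60, 100):
        print(f"{ms:>4} ms latency = {latency_in_frames(ms):.1f} frames at 60 FPS")
```

At 60 FPS one frame lasts about 16.7 ms, so every additional 16.7 ms of latency on a stream shifts it by one more frame.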

When a single, standalone video stream is involved, its latency can be managed with proper buffering to provide a steady decoded output at the source frame rate, only delayed. Buffering absorbs the variability in latency and avoids dropped and frozen frames. We have all experienced dropped and frozen frames, especially when viewing streaming video, for example as “buffering…” messages and shuddering video. Even so, buffering works for a single-stream image.
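The buffering idea can be sketched as a fixed playout delay: each frame is shown a constant time after it was captured, so the output cadence stays steady even though arrival times vary, and only frames that arrive after their display slot are lost. This is a minimal illustration, not EON's implementation. It assumes that each frame carries a capture timestamp and that the source and receiver share a common clock; the `Frame` type, `schedule_playout` function, latency values and 200 ms delay are all hypothetical.

```python
from dataclasses import dataclass

FRAME_PERIOD_MS = 1000.0 / 60  # assumed 60 FPS source


@dataclass
class Frame:
    source_ts_ms: float  # capture time stamped at the source
    arrival_ms: float    # time the decoded frame is ready at the receiver (same clock, assumed)


def schedule_playout(frames, playout_delay_ms):
    """Show each frame a fixed delay after capture; frames not ready by then are late."""
    on_time, late = [], []
    for f in frames:
        display_at = f.source_ts_ms + playout_delay_ms
        if f.arrival_ms <= display_at:
            on_time.append((display_at, f))
        else:
            late.append(f)  # would show up as a dropped or frozen frame
    return on_time, late


if __name__ == "__main__":
    latencies_ms = [80, 150, 120, 300]  # illustrative latencies that vary per frame
    frames = [Frame(i * FRAME_PERIOD_MS, i * FRAME_PERIOD_MS + lat)
              for i, lat in enumerate(latencies_ms)]
    on_time, late = schedule_playout(frames, playout_delay_ms=200)
    for display_at, f in on_time:
        print(f"captured at {f.source_ts_ms:6.1f} ms, shown at {display_at:6.1f} ms")
    print(f"{len(late)} late frame(s)")
```

A larger playout delay absorbs more variability but adds more end-to-end latency; this trade-off is acceptable for a single stream, but it does nothing to keep two separate streams aligned with each other.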

3D Images require 2 time-correlated images viewed together

However, 3D imaging requires two time-correlated images to be viewed together. If one eye views an image that is 2 to 20 frames older than the image seen by the other eye, the viewer will find the 3D image hard to take in and correlate into a clear perspective view of the object.

Correlated HD SDI 3D Imaging System Solution

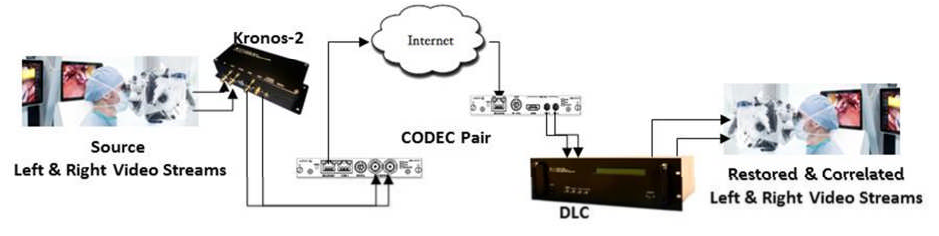

EON believes there is a solution that can dynamically correlate two independent video streams without specialised CODECs, network data or setup. One of its elements is a two-channel version of EON’s KRONOS Time stamper. KRONOS receives HD-SDI video from a source and embeds an accurate microsecond timestamp, as KLV (Key-Length-Value) metadata, on each frame.
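For readers unfamiliar with KLV, the Python sketch below packs a microsecond timestamp into a Key-Length-Value triplet: a 16-byte key, a BER-encoded length and the value. The key here is a made-up placeholder, and the 8-byte big-endian "microseconds since an epoch" value layout is an assumption for illustration only. It does not describe the KRONOS format, and a real system would use the key and value definition from the applicable SMPTE/MISB standard.

```python
import struct

# Hypothetical placeholder key; NOT a registered SMPTE/MISB Universal Label.
TIMESTAMP_KEY = bytes(range(16))


def encode_ber_length(n: int) -> bytes:
    """BER length: short form (one byte) below 128, long form otherwise."""
    if n < 128:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def decode_ber_length(buf: bytes, pos: int) -> tuple[int, int]:
    """Return (length, position of the first value byte)."""
    first = buf[pos]
    if first < 0x80:
        return first, pos + 1
    count = first & 0x7F
    return int.from_bytes(buf[pos + 1:pos + 1 + count], "big"), pos + 1 + count


def encode_timestamp_klv(timestamp_us: int) -> bytes:
    """Key + BER length + assumed 8-byte big-endian unsigned microsecond value."""
    value = struct.pack(">Q", timestamp_us)
    return TIMESTAMP_KEY + encode_ber_length(len(value)) + value


def decode_timestamp_klv(packet: bytes) -> int:
    """Recover the microsecond timestamp from a packet built by encode_timestamp_klv."""
    if packet[:16] != TIMESTAMP_KEY:
        raise ValueError("unexpected KLV key")
    length, pos = decode_ber_length(packet, 16)
    (timestamp_us,) = struct.unpack(">Q", packet[pos:pos + length])
    return timestamp_us


if __name__ == "__main__":
    packet = encode_timestamp_klv(1_700_000_000_123_456)
    print(len(packet), decode_timestamp_klv(packet))
```

Because the timestamp travels as metadata alongside each frame, it survives only if every CODEC and relay in the chain passes the KLV data through unchanged.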

The Dynamic Latency Compensator (DLC) IPC is used in a two-channel form. It reads the microsecond timestamp of each frame in both incoming HD-SDI video streams and compares it with a locally synchronised time from either GPS or NTP. Using these time measurements, the DLC generates a pair of SDI outputs that represent the original 3D imagery with a differential error of 0 to 1 frame. It can also measure the source-to-destination latency frame by frame and report its average. An accurate, real-time latency measurement can help manage man-in-the-loop control: in man-in-the-loop situations, a latency beyond 200 milliseconds can result in unpredictable and dangerous human control.
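A simplified version of the two DLC tasks described above can be sketched in Python: pairing left and right frames whose source timestamps fall within half a frame period of each other, and averaging the latency measured against a synchronised local clock. This is not EON's DLC algorithm. The 60 FPS frame period, the function names and the nearest-timestamp matching rule are assumptions for illustration; only the 200 ms man-in-the-loop limit comes from the text.

```python
from bisect import bisect_left
from statistics import mean

FRAME_PERIOD_US = 1_000_000 / 60  # assumed 60 FPS source
MAN_IN_LOOP_LIMIT_US = 200_000    # 200 ms limit quoted in the text


def pair_frames(left_ts, right_ts, frame_period_us=FRAME_PERIOD_US):
    """Pair each left-eye timestamp with the nearest right-eye timestamp.

    Both lists hold source timestamps in microseconds, sorted ascending.
    A pair is kept only if the two frames lie within half a frame period.
    """
    pairs = []
    for t in left_ts:
        i = bisect_left(right_ts, t)
        candidates = right_ts[max(i - 1, 0):i + 1]  # neighbours around t
        if not candidates:
            continue
        nearest = min(candidates, key=lambda r: abs(r - t))
        if abs(nearest - t) <= frame_period_us / 2:
            pairs.append((t, nearest))
    return pairs


def mean_latency_us(source_ts, local_arrival_ts):
    """Average latency: local (GPS/NTP-synchronised) arrival time minus source timestamp."""
    return mean(a - s for s, a in zip(source_ts, local_arrival_ts))


def exceeds_man_in_loop_limit(latency_us):
    """True when latency is beyond the 200 ms man-in-the-loop limit."""
    return latency_us > MAN_IN_LOOP_LIMIT_US


if __name__ == "__main__":
    left = [0, 16_667, 33_333, 50_000]
    right = [16_667, 33_333, 50_000, 66_667]  # right stream missing its first frame
    print(pair_frames(left, right))
    latency = mean_latency_us([0, 16_667], [120_000, 150_000])
    print(latency, exceeds_man_in_loop_limit(latency))
```

Matching on source timestamps rather than arrival order is what keeps the pair aligned: each stream may arrive with a different and changing delay, but frames captured at the same instant still carry matching timestamps.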

The CODECs used for this solution can be H.264/H.265 encoder/decoder pairs, targeting bit rates as low as 6 megabits/sec¹. However, they must reliably preserve the KLV metadata timestamp, both during encoding and when decoding back to a pair of SDI streams. Such CODECs are available from multiple sources.
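To put the 6 megabits/sec target in perspective, the Python sketch below estimates the compression ratio needed for one stream. It assumes 4:2:2 10-bit sampling (20 bits per pixel on average) and counts only the active picture, so it ignores blanking and ancillary data; the nominal SDI link rates (1.485 Gb/s for HD-SDI, 2.97 Gb/s for 3G-SDI) are higher. The function names and chosen formats are illustrative.

```python
def active_video_bitrate(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed active-picture bit rate in bits per second."""
    return width * height * fps * bits_per_pixel


def compression_ratio(uncompressed_bps: float, target_bps: float) -> float:
    """How many times the encoder must shrink the stream to meet the target."""
    return uncompressed_bps / target_bps


TARGET_BPS = 6_000_000  # 6 Mb/s per stream

if __name__ == "__main__":
    for name, (w, h) in {"720p60": (1280, 720), "1080p60": (1920, 1080)}.items():
        bps = active_video_bitrate(w, h, 60, 20)  # 4:2:2 10-bit -> 20 bits/pixel
        ratio = compression_ratio(bps, TARGET_BPS)
        print(f"{name}: {bps / 1e9:.2f} Gb/s uncompressed, about {ratio:.0f}:1 at 6 Mb/s")
```

A 3D system carries two such streams, so the transport channel must carry twice the per-stream rate, plus the KLV metadata.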

Contact us and let’s collaborate to deliver a correlated HD-SDI 3D imaging system for your application.